Actionable AI Ethics #5: Content moderation systems, transparency on AI ethics methodology, and more ...

How many more principle sets do we need before we start moving to action?

Welcome to this week’s edition of the newsletter!

What I am thinking:

Get transparent about your AI ethics methodology

What I am reading:

Algorithmic content moderation: Technical and political challenges in the automation of platform governance

Responsible AI #AIForAll : Approach Document for India - Part 1: Principles for Responsible AI

AI Ethics Tool of the Week:

Watch this space in the following weeks for a tool that I think is going to be particularly useful for your ML workflow. If you know of a tool that you think should be shared with the community, feel free to hit reply to this email or reach out to me through one of the ways listed here.


Howdy! Great to see you back here - this week has been extremely busy, but at this point that can be said for all weeks, right?!

Since last week, I spoke at Harvard Business School on “Technology and Social Justice”, where we discussed both the technical tradeoffs that we need to make when implementing responsible AI and how organizational decision-making needs to be structured to operationalize AI ethics effectively. This drew a bit from the article that I penned on LinkedIn, which you can find a little lower in this newsletter.

I also had an opportunity to work with the United Nations Office for Disarmament Affairs, the National University of Singapore, Nanyang Technological University, and the Singapore University of Technology and Design on the subject of responsible innovation, helping students in the RISE program better understand some of the ethical considerations of their technical solutions.

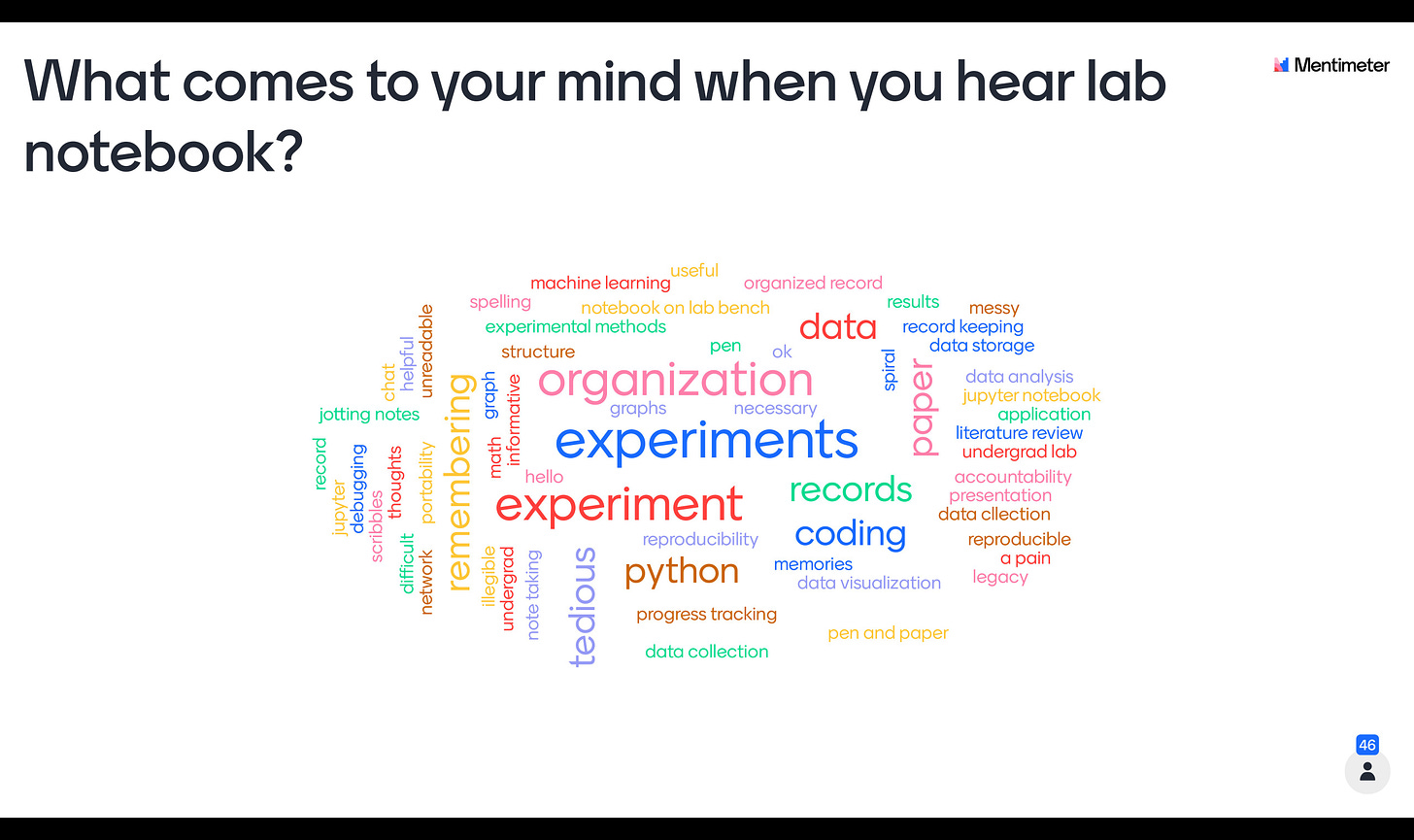

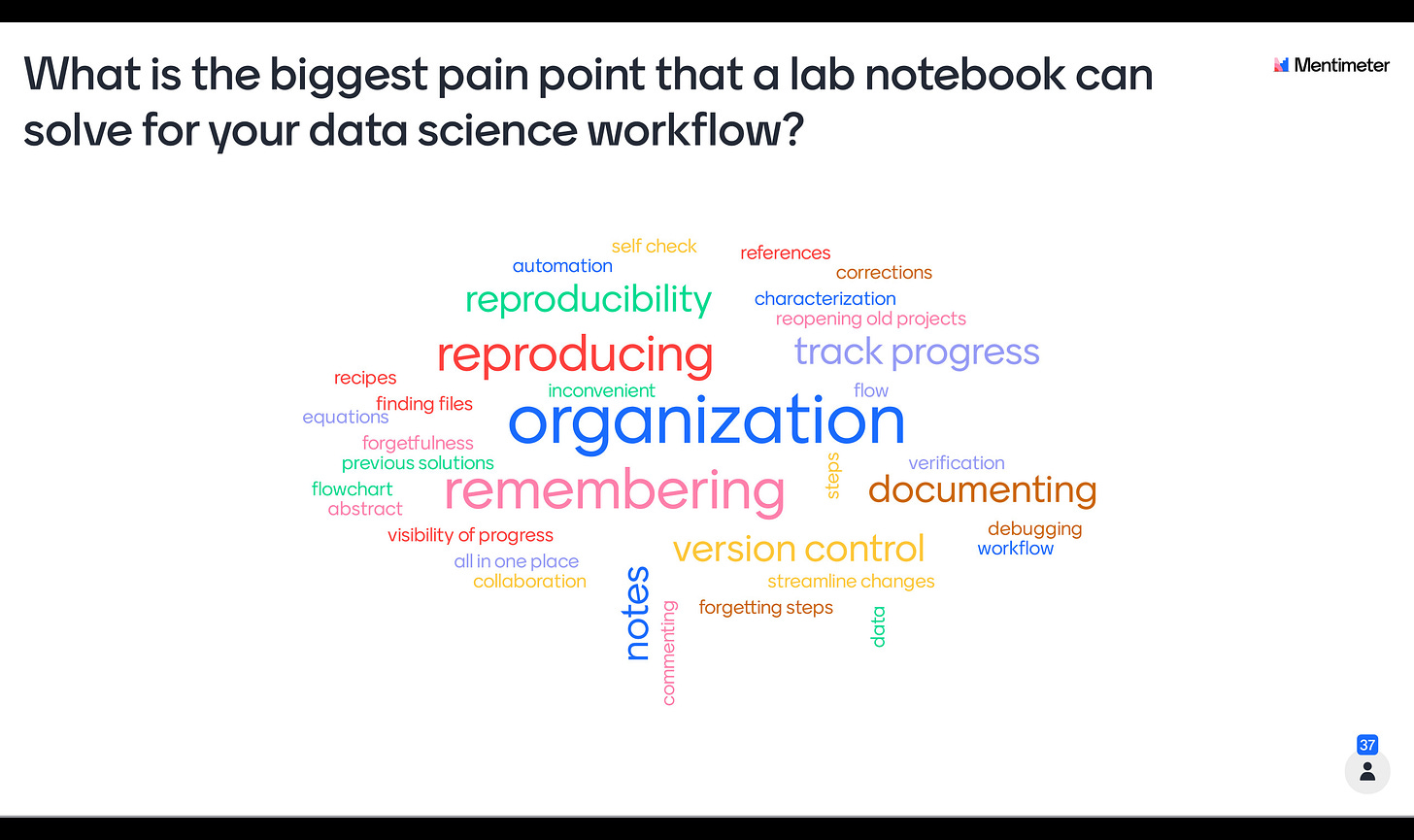

If you read the last edition of the newsletter, you’ll remember that I mentioned a new idea that I was going to present this week at York University. Some of you emailed me, got a sneak peek at it, and shared some thoughts as well, so thank you! I wanted to share some results on that idea from the participants at the presentation for all of you here:

And good news - Chapter 3 has been submitted and is going through the editing process right now!

Chapter 4 is also getting close to being done and will focus on privacy-preserving machine learning. A lot of people get confused about what differential privacy means and how it works in practice, which is something that I touch on in that chapter. If there are other concepts in this category that you would like covered, please feel free to hit reply to this email or leave a comment and I’d be happy to explain them and include them in the chapter as well!

What I am thinking

Get transparent about your AI ethics methodology

So you've heard about AI ethics and its importance in building AI systems that are aligned with societal values. There might be many reasons why you choose to embark on this journey of incorporating responsible AI principles into your design, development, and deployment phases. But we've talked about those before here, and you'll find tons of literature elsewhere that articulates why you should be doing it.

I want to take a few moments to talk about how you should be doing it, and to zero in on one aspect of that: transparency on your AI ethics methodology.

What I am reading

Algorithmic content moderation: Technical and political challenges in the automation of platform governance

The paper provides a comprehensive overview of existing content moderation practices and some of the basic terminology associated with this domain. It also goes into detail on the pros and cons of different approaches and the difficulties that persist in the field despite the introduction of automated content moderation. Finally, it outlines future directions that are worth our attention for developing more effective content moderation approaches.

Responsible AI #AIForAll : Approach Document for India - Part 1: Principles for Responsible AI

Following the release of the national strategy for AI from the Indian Government a couple of years ago, this paper articulates principles for building Responsible AI systems. It takes an approach rooted in the Indian Constitution, linking the principles to concrete segments of the law that provide a firm mandate for their adoption. It draws from similar efforts around the globe while ensuring that the principles are somewhat tailored to the Indian context.

AI Ethics Tool of the Week

Watch this space in the following weeks for a tool that I think is going to be particularly useful for your ML workflow. If you know of a tool that you think should be shared with the community, feel free to hit reply to this email or reach out to me through one of the ways listed here.

These are weekly musings on the technical applications of AI ethics. A word of caution: these are fluid explorations as I discover new methodologies and ideas to make AI ethics more actionable, and as such they will evolve over time. Hopefully you’ll find the nuggets of information here useful in moving you and your team from theory to practice.

You can support my work by buying me a coffee! It goes a long way in helping me create great content every week.

If something piques your interest, please do leave a comment and let’s engage in a discussion.

Did you find the content from this edition useful? If so, share this with your colleagues to give them a chance to engage with AI ethics in a more actionable manner!

Finally, before you go, this newsletter is a supplement to the book Actionable AI Ethics (Manning Publications) - make sure to grab a copy and learn more from the website for the book!